research stories

Event-Based Vision

Cheng Gu, Erik Learned-Miller, Daniel Sheldon, Guillermo Gallego, Pia Bideau

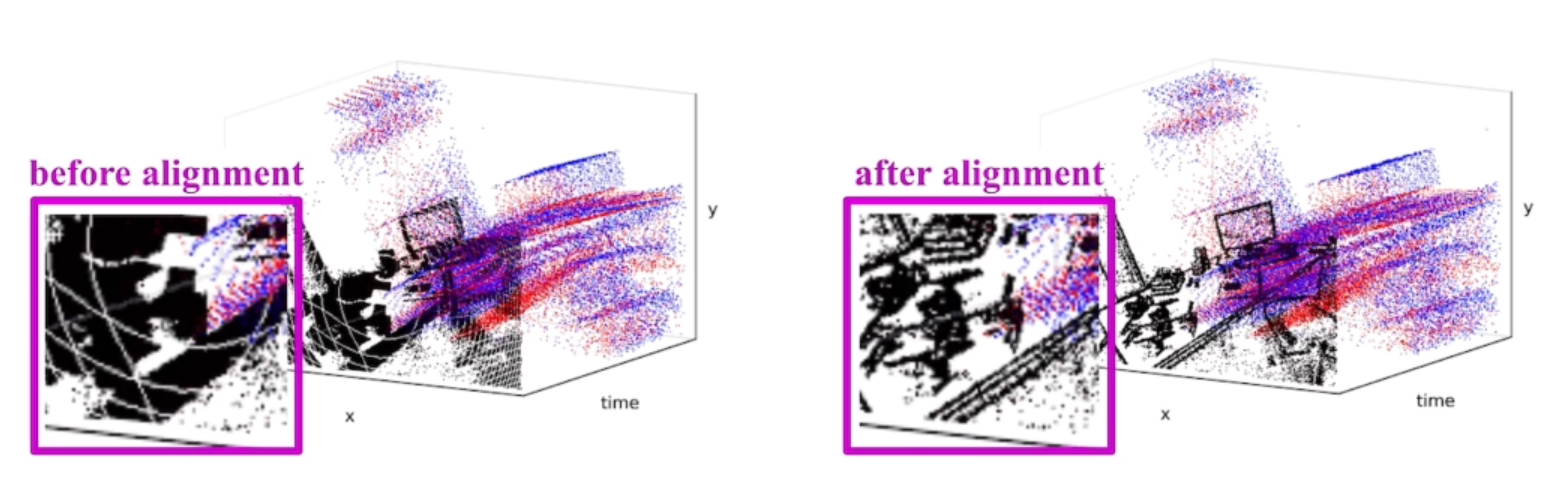

Event cameras, inspired by biological vision systems, provide a natural and data-efficient representation of visual information. Visual information is acquired in the form of events that are triggered by local brightness changes. However, because most brightness changes are triggered by relative motion of the camera and the scene, the events recorded at a single sensor location seldom correspond to the same world point. To extract meaningful information from event cameras, it is helpful to register events that were triggered by the same underlying world point. In this work we propose a new model of event data that captures its natural spatio-temporal structure. We start by developing a model for aligned event data, that is, a model for the data as though it had already been perfectly registered. In particular, we model the aligned data as a spatio-temporal Poisson point process. Based on this model, we develop a maximum likelihood approach to registering events that are not yet aligned: we find transformations of the observed events that make them as likely as possible under our model. In particular, we extract the camera rotation that leads to the best event alignment. We show new state-of-the-art accuracy for rotational velocity estimation on the DAVIS 240C dataset. Our method is also faster and has lower computational complexity than several competing methods.
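The idea of alignment by maximum likelihood can be illustrated with a toy sketch. It is not the method described above. It assumes a 1D sensor and a constant translational velocity instead of a camera rotation, and it scores each candidate velocity with a plain Poisson log-likelihood in which every pixel's rate is set to its own observed count. The names `poisson_alignment_score`, `estimate_velocity` and `simulate_events` are hypothetical and chosen only for this illustration.

```python
import math
from collections import Counter


def poisson_alignment_score(events, velocity, bin_width=1.0):
    """Poisson log-likelihood of per-pixel counts after warping events by `velocity`.

    Assumption for this toy: each pixel's rate is its own count (plug-in estimate),
    so the score is sum_k [k*log(k) - k - log(k!)], which grows as events
    concentrate in fewer pixels.
    """
    counts = Counter(math.floor((x - velocity * t) / bin_width) for x, t in events)
    return sum(k * math.log(k) - k - math.lgamma(k + 1) for k in counts.values())


def estimate_velocity(events, candidates):
    """Grid search: return the candidate velocity with the highest score."""
    return max(candidates, key=lambda v: poisson_alignment_score(events, v))


def simulate_events(true_velocity, starts, events_per_point=40):
    """Events (x, t) fired by points that start at `starts` and move at `true_velocity`."""
    events = []
    for x0 in starts:
        for i in range(events_per_point):
            t = i / events_per_point  # timestamps spread over [0, 1)
            events.append((x0 + true_velocity * t, t))
    return events


# Hypothetical scene: five world points seen by a 1D sensor moving at 12 px/s.
events = simulate_events(12.0, starts=[10.3, 27.8, 45.1, 63.6, 81.4])
candidates = [float(v) for v in range(0, 25)]
best_velocity = estimate_velocity(events, candidates)
print(best_velocity)
```

At the correct velocity, all events from one world point fall into a single pixel, and this concentration is what the score rewards. Extending the sketch to rotations would mean replacing the 1D shift with a rotation-induced warp of the image coordinates.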

Fixation-Based Active Vision

As humans, we fixate our gaze without conscious notice. Observe what you do when you look out of the window of a moving train: you fixate on a lamp post, a tree or a passing vehicle. It turns out that this seemingly trivial act of fixating makes understanding the 3D world very easy. We show that when a robot mimics this behavior, it can robustly interact with the world using only a 2D camera (such as a webcam), without building any sophisticated world model.

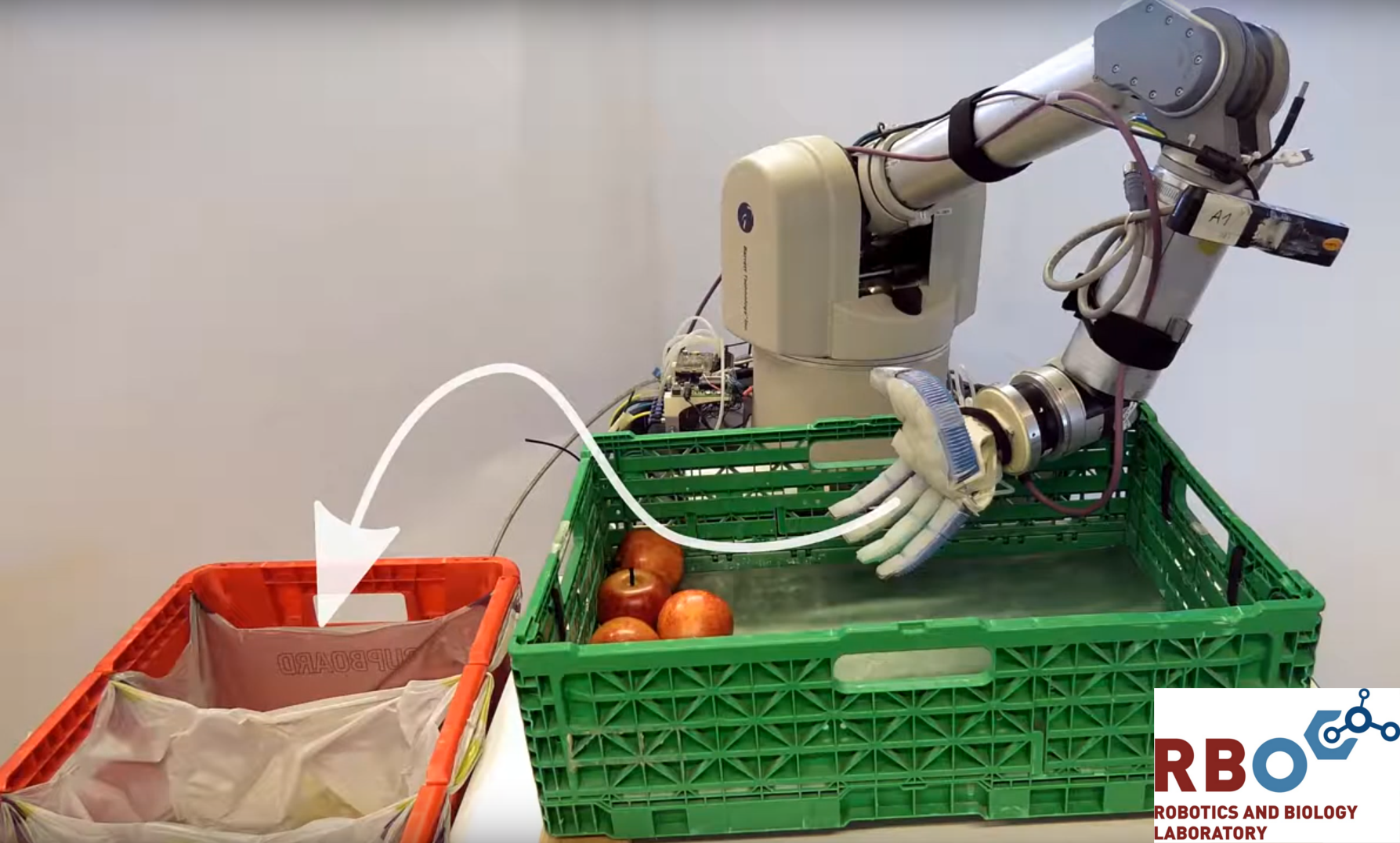

Human-Like Grasping From Piles

Humans grasp objects with incredible ease. We can easily pick up a single piece of popcorn from a bowl or a single nut from a container, even without looking, while watching a movie. This may sound surprising at first if we consider the chaotic motion of popcorn in a pile. Humans have developed skills and engineered their environment to simplify their lives, so we need less concentration and can sometimes grasp without even looking.

To transfer human skill to a robot, we need to understand what phenomena humans leverage during grasping. For example, we can study a pile’s motion when grasping from it and extract a pattern that emerges consistently. We identified such a pattern in piles and transferred a human-like grasping skill that uses it to a robot. Like humans, our grasp strategies do not need a camera to see individual objects in a pile in order to grasp one.

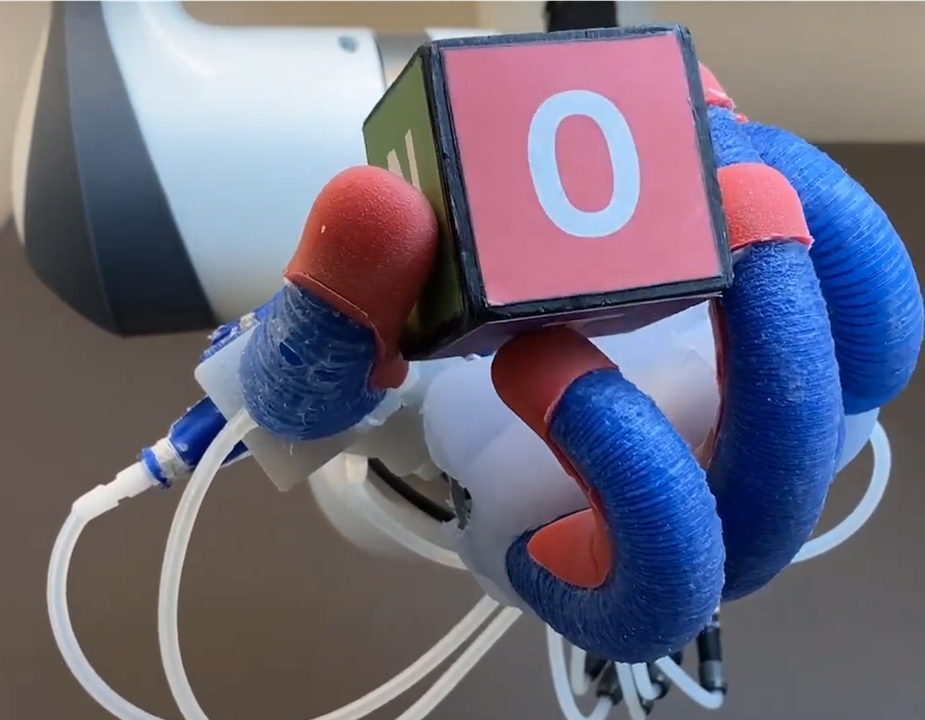

In-Hand Manipulation with Dexterous Soft Hands

In-hand manipulation is among the hardest problems in robotics. Even subtle finger movements can make or break contact, which can cause the hand to lose its grasp and drop the object. This complicates the control of dexterous robot hands, as actions must be chosen wisely. This view has dominated manipulation research in recent decades and applies to rigid and stiff hands, where every movement of the hand is a consequence of the underlying control algorithm. Dexterous soft hands mark a paradigm shift in this area. Because of their compliant properties, these hands are able to absorb the complex contact dynamics that make life difficult for researchers working with rigid hands. Such systems naturally dampen impacts, balance contact forces and provide large contact patches for stable grasping and manipulation. In our research on soft in-hand manipulation, we want to understand the perspectives that open up when we leave these aspects entirely to the morphological properties of the hand.

A Promising Principle of Intelligence from Robotics

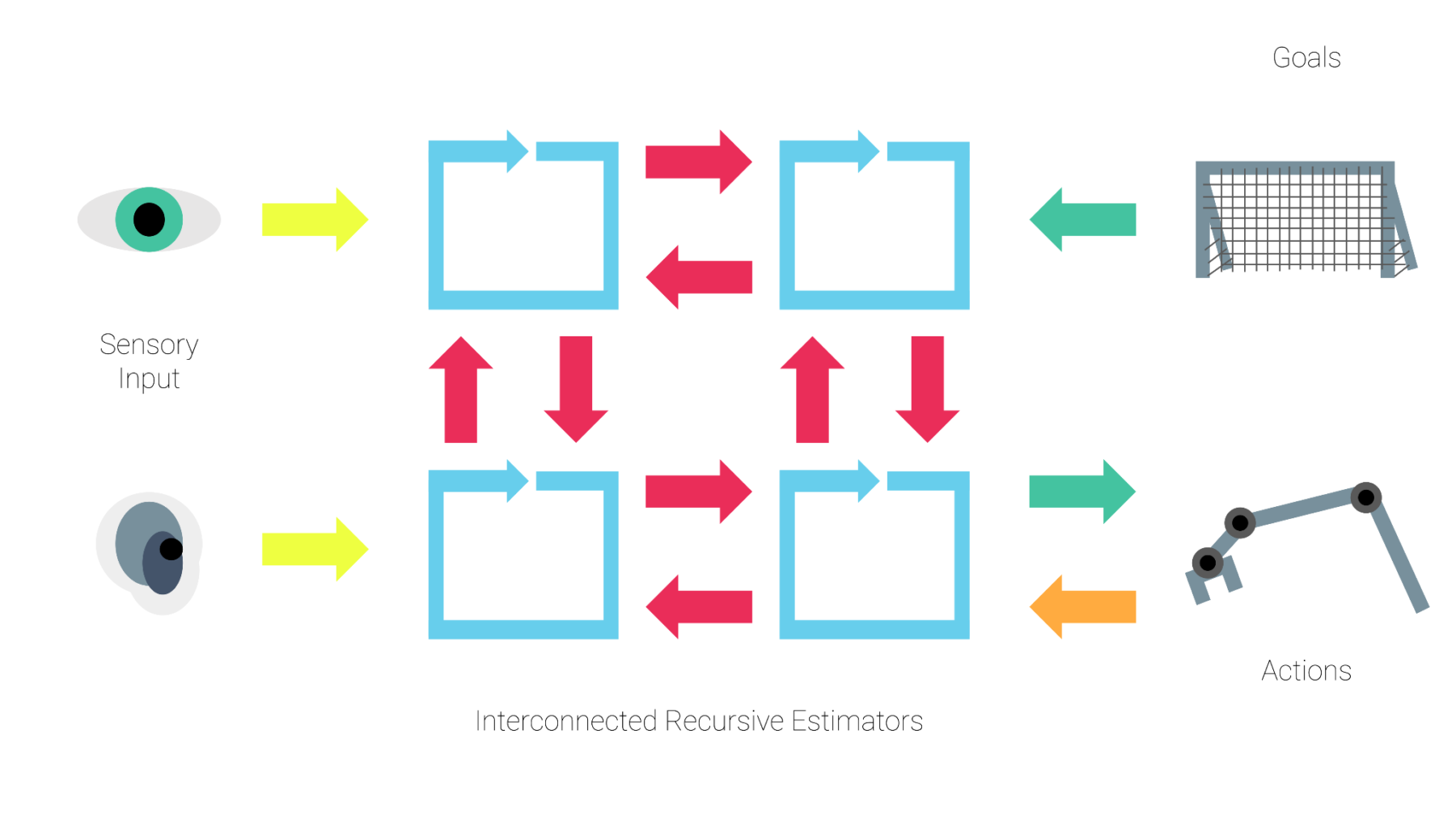

Whenever we talk about intelligent behavior of organisms, it arises from a mapping of their sensory inputs to suitable actions. However, performing this mapping is extremely hard. On the one hand, the sensory input is very high-dimensional: for example, one human eye alone has over 100 million sensory receptors. On the other hand, one has to choose the right action to achieve complex goals given only uncertain estimates of the environment. Drawing from previous robotics research, we propose a computational principle to perform this mapping by extracting the task-relevant information from the sensory input and generating suitable actions in an integrated manner.

The computational principle consists of three building blocks: recursive estimators, interconnections, and differentiable programming. Recursive estimators are the tool to extract information about the state of the world, integrating information from observations and our actions. We interconnect multiple of these recursive estimators to extract more complex information while also making the perception more robust, comparing new observations to our current belief. We use this robust system for perception to also generate reasonable actions using differentiable programming: It allows us to automatically determine how the environment might change given different actions, thereby enabling us to come up with the best actions to achieve our goals.
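As a minimal sketch of the first building block, a recursive estimator can be written as a predict step that integrates an action and an update step that integrates an observation. The example below assumes a one-dimensional Kalman filter, which is one common recursive estimator. The text does not state which estimators are actually used, and the names `Belief`, `predict` and `update` as well as all numeric values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Belief:
    """Gaussian belief over a scalar state (assumption of this sketch)."""
    mean: float
    var: float


def predict(belief: Belief, action: float, process_var: float) -> Belief:
    """Integrate our own action: shift the mean, grow the uncertainty."""
    return Belief(belief.mean + action, belief.var + process_var)


def update(belief: Belief, observation: float, obs_var: float) -> Belief:
    """Integrate an observation by comparing it to the current belief."""
    gain = belief.var / (belief.var + obs_var)   # trust in the observation
    innovation = observation - belief.mean       # surprise relative to the belief
    return Belief(belief.mean + gain * innovation, (1.0 - gain) * belief.var)


# One recursion step with made-up numbers: move by 1.0, then observe 1.2.
prior = Belief(mean=0.0, var=1.0)
predicted = predict(prior, action=1.0, process_var=0.1)
posterior = update(predicted, observation=1.2, obs_var=0.5)
print(posterior)
```

The posterior of one step becomes the prior of the next, which is what makes the estimator recursive. Interconnecting estimators could then mean feeding one estimator's belief into another's predict or update step, and because every operation here is differentiable, the same structure could in principle be used to ask how the belief would change under different actions.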

Since we expect that this computational principle could explain a wide variety of intelligent behaviors, we propose it as a more general principle of intelligence. In this project, we therefore study it in a variety of different intelligent behaviors in collaboration with the interdisciplinary research teams at Science of Intelligence.

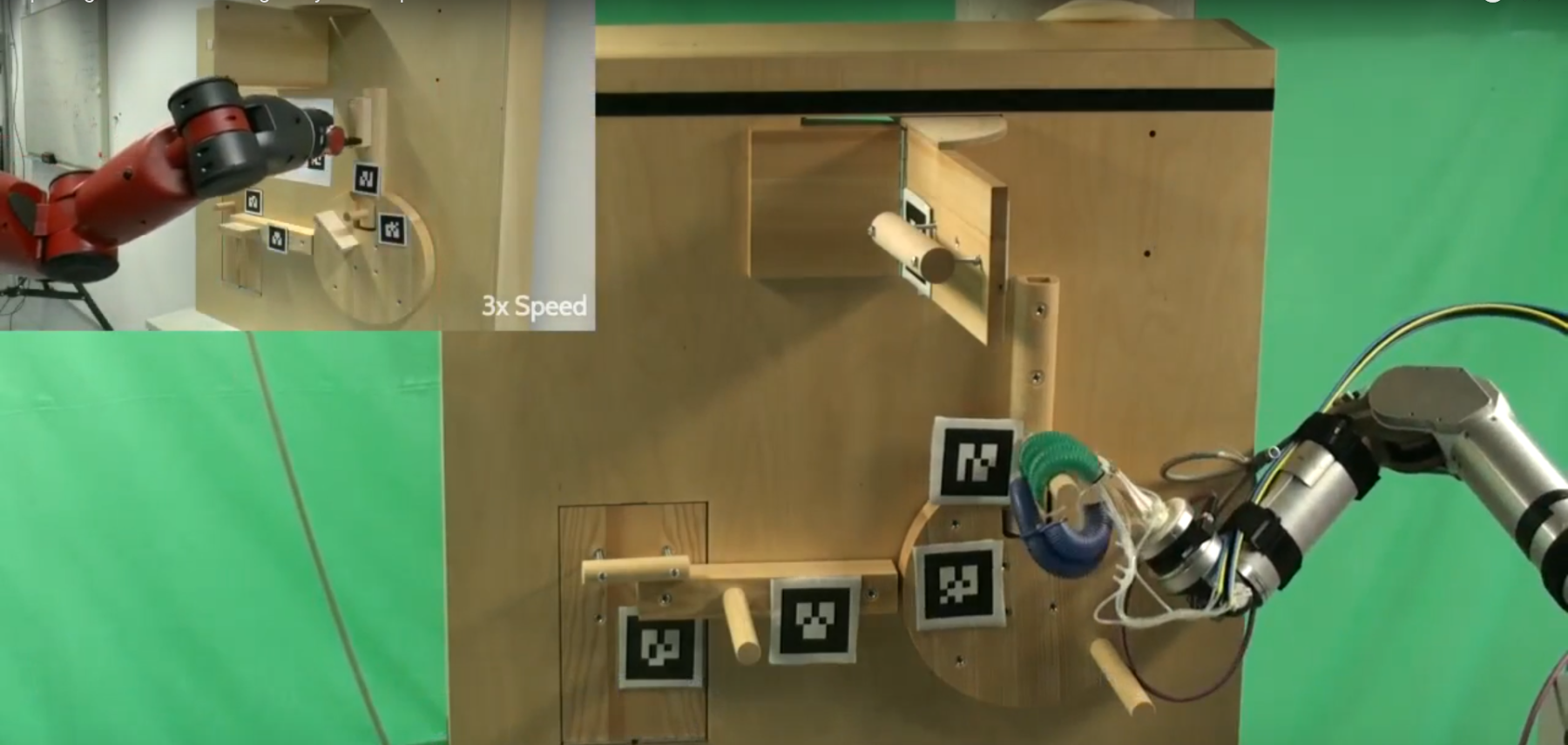

Learning Task-Directed Interactive Perception Skills

If we want robots to assist us in our everyday environments, they need to understand the different mechanisms that surround us. These mechanisms include kinematic joints in furniture and in tools such as scissors, staplers or laptops. It is difficult or even impossible to understand the kinematics of an environment from passive observation alone. This is why robots need to interact with their surroundings to extract useful information that they can use to build models. In my research I am interested in learning such interactive perception skills. This research topic is interesting because it combines perception, control, learning and other areas under a unifying theme.

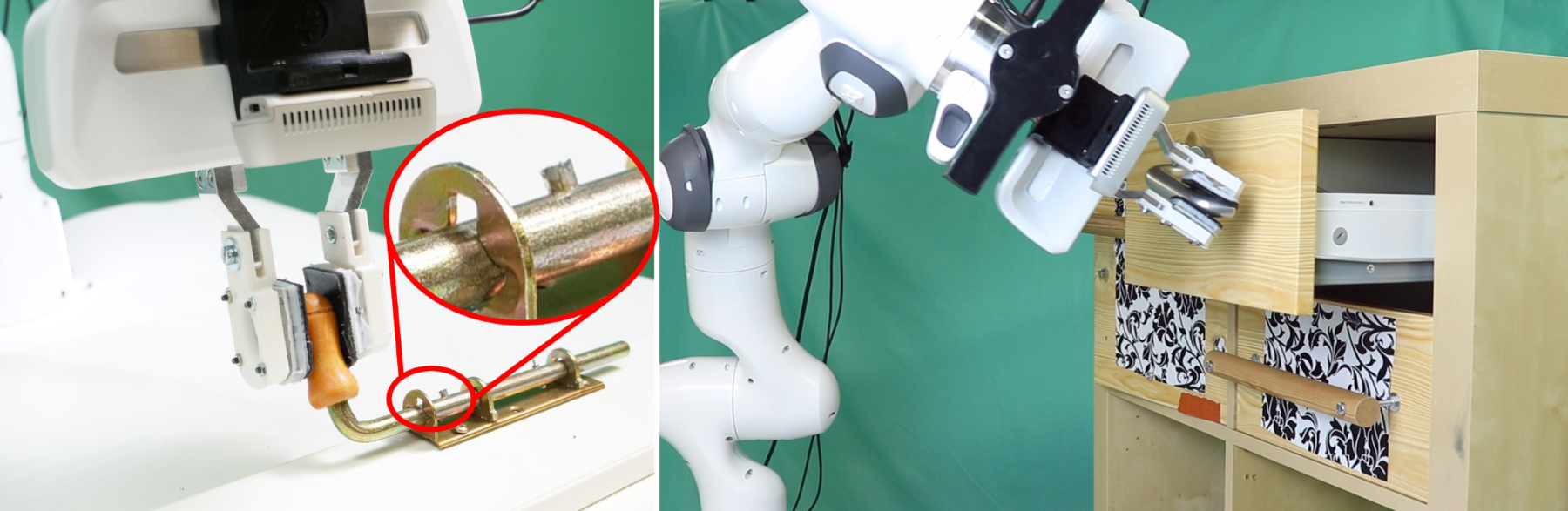

Learning from Demonstration by Exploiting Environmental Constraints

In this project, we will develop a system that allows a robot to acquire new skills by imitating a user to solve an escape room task. We observe that humans extensively exploit the environment through physical contact to ease various manipulation tasks. For example, when inserting a peg into a hole, a human might tilt the peg, partially insert the tilted peg into the hole, and then complete the insertion by compliantly letting the environment (i.e., the hole) guide the peg towards its goal. We should not ignore this insight when attempting to learn manipulation skills from human demonstrations. Motivated by this, we devise a novel approach to Learning from Demonstration that uses environmental constraints as the underlying representation. This approach generalizes from a single human demonstration of a task using an articulated object (such as a lock, door or drawer) to other objects with similar but not identical kinematic structures, different sizes, and different object placements.

Rational Selection of Exploration Strategies in an Escape Room Task

In recent years, robots have gained increasing abilities to solve complex tasks that were once thought to be achievable only by humans. Two properties that robots have not yet mastered are flexibility and adaptability. Current robots perform poorly on tasks with variable properties or in changing environments. One model that aims to explain these two properties in humans is the multi-strategy model, also called the Brain’s Toolbox. This model assumes that intelligent agents have a variety of strategies and a selection mechanism that selects the most appropriate strategy to solve a given problem. When making a decision, the selection mechanism evaluates two properties of each strategy and trades them off against each other: the utility of the strategy for the given task and the cost of implementing that strategy. Thus, to make an optimal strategy choice, an agent must identify the correct environmental cues and use them to properly assess utility and costs.
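The selection mechanism described above can be sketched in a few lines. The sketch assumes that the trade-off is a simple difference, utility minus cost. The text only says that the two properties are traded off, so this scoring rule, the `Strategy` class, the `select_strategy` function and the example strategies with their numbers are all hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Strategy:
    name: str
    utility: float  # expected benefit for the current task, estimated from cues
    cost: float     # expected effort of executing the strategy


def select_strategy(strategies: Iterable[Strategy]) -> Strategy:
    """Pick the strategy with the highest net value (assumed: utility - cost)."""
    return max(strategies, key=lambda s: s.utility - s.cost)


# Made-up estimates for three exploration strategies in an escape room.
toolbox = [
    Strategy("random_poking", utility=0.3, cost=0.1),
    Strategy("systematic_search", utility=0.7, cost=0.4),
    Strategy("model_based_planning", utility=0.9, cost=0.8),
]
chosen = select_strategy(toolbox)
print(chosen.name)
```

In this sketch, flexibility would come from re-estimating `utility` and `cost` whenever the environmental cues change, so that a different strategy can win in a different situation.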

In our research, we aim to understand the multi-strategy model and assess its contribution to increasing flexibility and adaptability in robotic problem-solving.

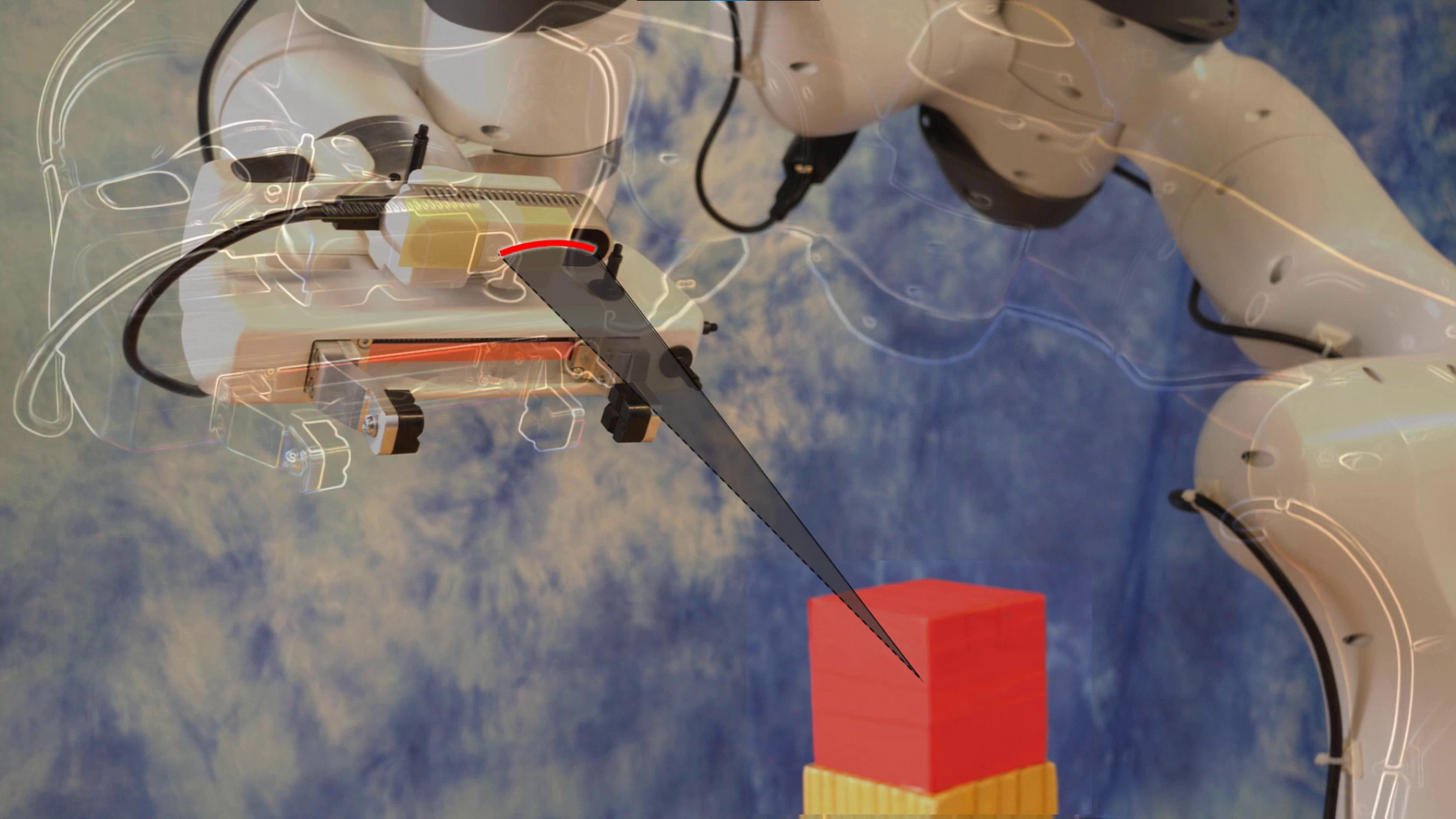

Self-Supervised Learning of Visual Representations for Environmentally Constrained Grasping Strategies

Pia Bideau, Oliver Brock, Rosa Wolf, Clemens Eppner

Environment-constrained grasping strategies exploit the tightly coupled interaction between object, environment and agent. Prior to any interaction with the environment, humans can easily extract such contextual information from visual cues. How can we learn a compact but rich spatio-temporal representation of object and environment that allows the agent to reason and to select its action accordingly?

Prior work on grasping in computer vision and robotics relies on estimating object contact points or affordance regions from visual input to guide grasping behavior. Such visual contact estimates consider certain object characteristics but do not exploit the environment. In this project we focus in particular on integrating environmental cues as guidance for grasping behavior.

Sensorization for Soft Robots

Soft robotic hands can perform impressive grasping and manipulation tasks. Their inherent compliance provides robustness and safety. By adding sensors to soft robots, we can, for example, observe when the hand collides with something, detect whether an object was successfully grasped, or tactually explore unknown environments. But the high flexibility of soft robots creates novel challenges for their sensorization. On the one hand, we need sensors that are able to measure the highly complex deformations and interactions of soft robots. On the other hand, the sensors must be highly flexible themselves, so that they do not restrict the behavior of the robot or, in the worst case, break.

In our lab, we are researching several new sensor technologies that make clever use of the compliance of soft robots. For example, our liquid metal strain sensors measure the 3D shape of actuators by sensing the stretch of the actuator’s hull. And our acoustic sensor records sounds within the actuator to measure all kinds of different things, like contact locations, the material of objects, or even temperature. Ultimately, we want to provide our soft robots with all the necessary senses to successfully navigate any situation they may encounter.